Day 34: Bias/Variance and Neural Network

- eyereece

- Nov 3, 2023

In our previous post, we saw how looking at the training error and cross-validation error can give us a sense of whether our learning algorithm has high bias or high variance. But if a model does have a high bias or high variance problem, what do we do about it?

There are a few things we can try to make our model perform better. To fix a high variance issue, we can try:

- getting more training examples

- trying a smaller set of features

- increasing regularization

To fix a high bias issue, we can try:

- getting additional features

- adding polynomial features

- decreasing regularization
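To make the two lists easier to apply, here is a minimal sketch that maps a pair of error values to the fixes listed above. The helper name `suggest_fixes`, the `baseline_error` argument, and the `gap` threshold of 0.05 are all hypothetical choices for illustration, not values from this post.

```python
# Hypothetical helper: the names, the baseline argument, and the 0.05 gap
# threshold are illustrative assumptions, not values from the post.
HIGH_VARIANCE_FIXES = [
    "get more training examples",
    "try a smaller set of features",
    "try increasing regularization",
]
HIGH_BIAS_FIXES = [
    "try getting additional features",
    "try adding polynomial features",
    "try decreasing regularization",
]


def suggest_fixes(train_error, cv_error, baseline_error=0.0, gap=0.05):
    """Return candidate fixes based on training and cross-validation error."""
    suggestions = []
    # High bias: training error is well above the level we hoped to reach.
    if train_error - baseline_error > gap:
        suggestions.extend(HIGH_BIAS_FIXES)
    # High variance: cross-validation error is well above training error.
    if cv_error - train_error > gap:
        suggestions.extend(HIGH_VARIANCE_FIXES)
    return suggestions


# Low training error but much higher cross-validation error: high variance.
print(suggest_fixes(train_error=0.02, cv_error=0.20))
```

A model can trigger both checks at once, in which case the sketch simply returns both sets of suggestions.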

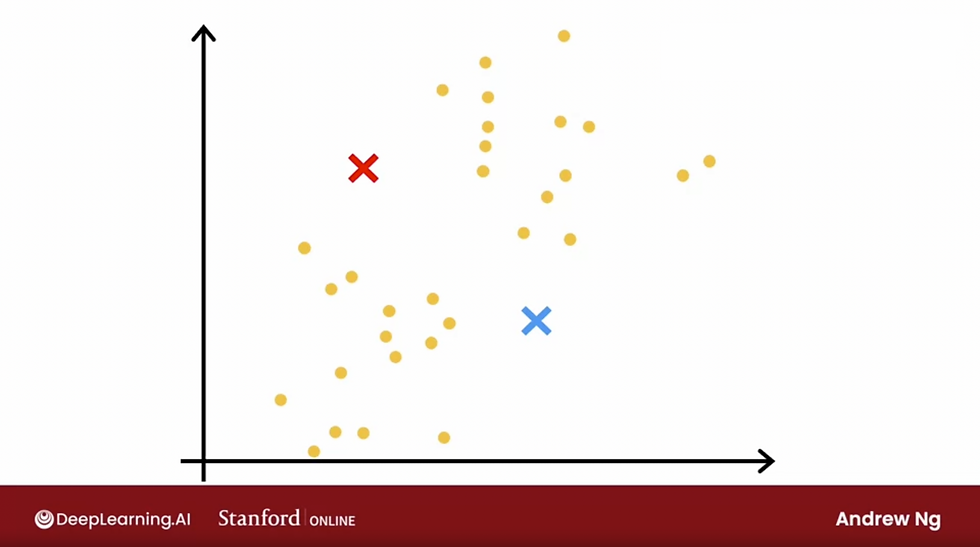

One of the reasons neural networks have been so successful is that, together with large datasets, they have given us new ways to address both high bias and high variance. Large neural networks, when trained on small to moderate sized datasets, are low bias machines. Let's take a look at a simple recipe to reduce bias/variance when training a neural network:
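The steps are not written out in the text here, so what follows is a minimal sketch of one common version of such a recipe, and it is an assumption on our part. First check whether the model does well on the training set and, if not, try a bigger network. Once it does, check the cross-validation set and, if it does poorly there, try getting more data. The function name `next_step`, the `baseline` argument, and the 0.05 `tolerance` are hypothetical.

```python
def next_step(j_train, j_cv, baseline=0.0, tolerance=0.05):
    """Pick the next action in a simple bias/variance loop (illustrative only)."""
    # Step 1: does the model do well on the training set?
    if j_train - baseline > tolerance:
        return "bigger network"  # high bias: add capacity
    # Step 2: does it also do well on the cross-validation set?
    if j_cv - j_train > tolerance:
        return "more data"  # high variance: collect more examples
    return "done"


# Assumed error values, chosen only to exercise each branch.
for j_train, j_cv in [(0.30, 0.35), (0.02, 0.20), (0.02, 0.03)]:
    print(j_train, j_cv, "->", next_step(j_train, j_cv))
```

Unlike checking bias and variance independently, this version only looks at the cross-validation error once the training error is acceptable, so the loop alternates between the two fixes until both checks pass.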

We previously explored regularization and know that it can help prevent overfitting in a large neural network. Let's see how it can be implemented with TensorFlow:

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.regularizers import L2

layer_1 = Dense(units=25, activation='relu', kernel_regularizer=L2(0.01))

layer_2 = Dense(units=15, activation='relu', kernel_regularizer=L2(0.01))

layer_3 = Dense(units=1, activation='sigmoid', kernel_regularizer=L2(0.01))

model = Sequential([layer_1, layer_2, layer_3])
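To make `kernel_regularizer=L2(0.01)` a little more concrete, here is a small pure-Python sketch of the penalty it adds for one layer: the factor (0.01 here) times the sum of the squared kernel weights. The function `l2_penalty` and the 2x2 example kernel are hypothetical and only for illustration; in the real model, Keras computes this from each layer's actual weights during training.

```python
def l2_penalty(weights, l2=0.01):
    """Sum of squared weights scaled by the L2 factor, for one layer's kernel."""
    return l2 * sum(w * w for row in weights for w in row)


# Hypothetical 2x2 kernel, purely for illustration.
example_kernel = [[1.0, -2.0], [0.5, 0.0]]
print(l2_penalty(example_kernel))
```

Larger weights increase the penalty quadratically, which is why raising the factor pushes the network toward smaller weights and a smoother fit.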
