Day 21: TensorFlow Implementation

- eyereece

- Aug 25, 2023


Updated: Aug 29, 2023

Inference in code

TensorFlow is one of the leading frameworks for implementing deep learning algorithms. In today's section, we will look at how to implement inference code using TensorFlow.

One of the remarkable things about neural networks is that the same algorithm can be applied to so many different applications.

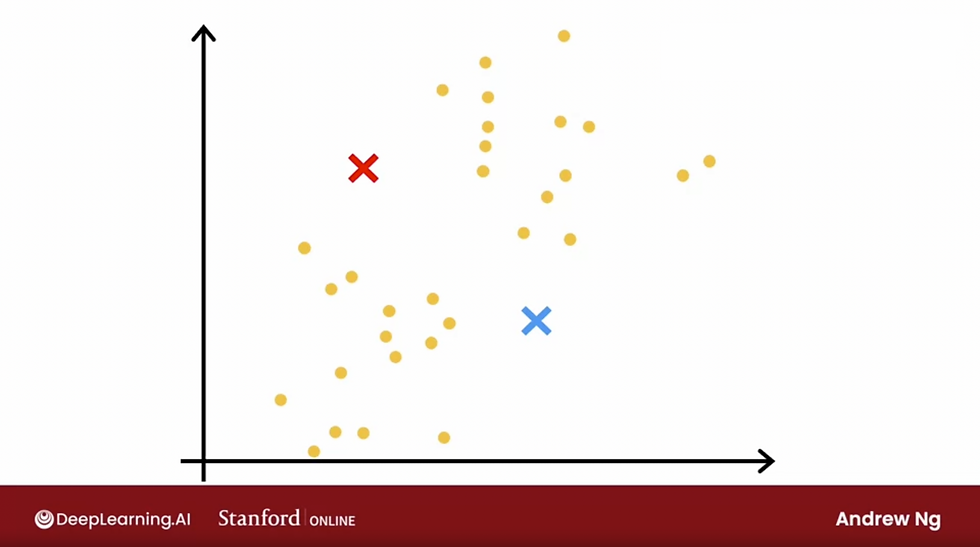

We will use a coffee roasting example:

Can the learning algorithm help optimize the quality of the beans you get from a roasting process?

When you're roasting coffee, you can control two parameters that affect the taste:

- duration, in minutes
- temperature, in degrees Celsius

The task is: given a feature vector x containing both temperature and duration, say 200 degrees Celsius for 17 minutes, how can we run inference in the neural network so that it tells us whether this temperature and duration setting will result in good coffee?

(Take a look at the image above for the diagram of this simple neural network)

```python
import numpy as np
from tensorflow.keras.layers import Dense

x = np.array([[200.0, 17.0]])  # one example: 200 °C for 17 minutes
layer_1 = Dense(units=3, activation="sigmoid")
a1 = layer_1(x)
```

`Dense` is TensorFlow's name for a fully connected layer, the kind of layer used throughout this network: every unit receives all of the activations from the previous layer.

`layer_1 = Dense(units=3, activation="sigmoid")` creates layer 1 with 3 units (neurons), each computing its activation with the sigmoid function.

`a1 = layer_1(x)` computes a1 by treating layer 1 as a function and applying it to the values in x. The result a1 is a tensor of shape (1, 3): one row for the single example, holding one activation per unit.
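To see what a dense layer like `layer_1` computes, here is a minimal NumPy sketch: each unit j computes g(w_j · x + b_j), which for all units at once is g(xW + b) with g the sigmoid. The values in `W1` and `b1` are made up for illustration (TensorFlow initializes and later learns its own); only their shapes, 2 inputs by 3 units, match layer_1.

```python
import numpy as np

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

# Same input as above: one example (one row) with 2 features.
x = np.array([[200.0, 17.0]])

# Hypothetical parameters with the shapes layer_1 uses:
# W1 has one column per unit (2 inputs x 3 units), b1 has one bias per unit.
W1 = np.array([[0.01, -0.02, 0.005],
               [0.10,  0.05, -0.20]])
b1 = np.array([-2.0, 1.0, 0.5])

# Dense layer: weighted sum for every unit at once, then the activation.
a1 = sigmoid(x @ W1 + b1)

print(a1.shape)  # (1, 3): one row, one activation per unit
```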

Repeat for the next layer:

```python
layer_2 = Dense(units=1, activation="sigmoid")
a2 = layer_2(a1)
```

For prediction, threshold the output at 0.5:

```python
if a2 >= 0.5:
    yhat = 1
else:
    yhat = 0
```

Let's look at another example, using our digit classification model from yesterday:

```python
import numpy as np
from tensorflow.keras.layers import Dense

x = np.array([[0.0, ...245, ...240...0]])  # pixel intensities (most values elided)
layer_1 = Dense(units=25, activation="sigmoid")
a1 = layer_1(x)
layer_2 = Dense(units=15, activation="sigmoid")
a2 = layer_2(a1)
layer_3 = Dense(units=1, activation="sigmoid")
a3 = layer_3(a2)

if a3 >= 0.5:
    yhat = 1
else:
    yhat = 0
```

Building a Neural Network

Let's look at the coffee roast data again (y = 1 means the setting produced good coffee):

| temperature (°C) | duration (minutes) | y |
|---|---|---|
| 200 | 17 | 1 |
| 120 | 5 | 0 |
| 425 | 20 | 0 |
| 212 | 18 | 1 |
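Each row of the table is one example and each column is one feature. That is also why the single coffee example earlier was written with double brackets: Keras `Dense` layers expect a 2-D input whose rows are examples. A quick NumPy check of the shapes involved, with variable names chosen just for this illustration:

```python
import numpy as np

# One example written with a single pair of brackets is a 1-D vector.
one_d = np.array([200.0, 17.0])
# Double brackets give a 2-D array: 1 row (example) x 2 columns (features).
two_d = np.array([[200.0, 17.0]])
# Stacking all four rows of the table gives 4 examples x 2 features.
table = np.array([[200.0, 17.0], [120.0, 5.0], [425.0, 20.0], [212.0, 18.0]])

print(one_d.shape, two_d.shape, table.shape)  # (2,) (1, 2) (4, 2)

# reshape turns the 1-D vector into the 2-D, one-row form.
print(one_d.reshape(1, -1).shape)  # (1, 2)
```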

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense

layer_1 = Dense(units=3, activation="sigmoid")
layer_2 = Dense(units=1, activation="sigmoid")
model = Sequential([layer_1, layer_2])
```

Instead of manually passing the data to layer_1 and then passing layer_1's activations to layer_2, we can tell TensorFlow to take layer_1 and layer_2 and string them together to form a neural network.
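Conceptually, stringing layers together just means feeding each layer's output into the next. Here is a minimal NumPy sketch of that idea; `TinyDense` and `TinySequential` are hypothetical stand-ins written for this illustration, not TensorFlow classes, and the weights are made up.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class TinyDense:
    """Hypothetical stand-in for a dense layer: a = sigmoid(a_in @ W + b)."""
    def __init__(self, W, b):
        self.W = np.asarray(W, dtype=float)  # shape (inputs, units)
        self.b = np.asarray(b, dtype=float)  # shape (units,)

    def __call__(self, a_in):
        return sigmoid(a_in @ self.W + self.b)

class TinySequential:
    """Hypothetical stand-in for Sequential: feeds each layer's output into the next."""
    def __init__(self, layers):
        self.layers = list(layers)

    def __call__(self, x):
        a = x
        for layer in self.layers:
            a = layer(a)
        return a

# Made-up weights with the coffee network's shapes: 2 inputs -> 3 units -> 1 unit.
layer_1 = TinyDense(W=[[0.01, -0.02, 0.005], [0.10, 0.05, -0.20]], b=[-2.0, 1.0, 0.5])
layer_2 = TinyDense(W=[[1.5], [-1.0], [0.5]], b=[0.2])
model = TinySequential([layer_1, layer_2])

x = np.array([[200.0, 17.0]])
# Chaining the layers by hand and calling the composed model give the same result.
manual = layer_2(layer_1(x))
print(np.allclose(model(x), manual))  # True
```

The design mirrors the manual version above: the composed model does nothing beyond calling each layer in order.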

```python
x = np.array([[200.0, 17.0],
              [120.0, 5.0],
              [425.0, 20.0],
              [212.0, 18.0]])
y = np.array([1, 0, 0, 1])
```

To train the neural network on this data, all you need to do is call two functions:

```python
model.compile(...)  # more on this later
model.fit(x, y)
```

`model.fit()` tells TensorFlow to take the neural network created by sequentially stringing together layer_1 and layer_2, and to train it on the data x and y.
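What training adjusts are the weights W and biases b of every unit. For the coffee network (2 input features, then 3 units, then 1 unit), layer 1 has 2 × 3 weights plus 3 biases and layer 2 has 3 × 1 weights plus 1 bias, 13 parameters in all; this should match the total `model.summary()` reports for this architecture. A small sketch of the count:

```python
# Layer sizes of the coffee network: 2 input features -> 3 units -> 1 unit.
layer_sizes = [2, 3, 1]

params_per_layer = []
for n_in, n_units in zip(layer_sizes[:-1], layer_sizes[1:]):
    n_weights = n_in * n_units  # W has shape (n_in, n_units)
    n_biases = n_units          # one bias b per unit
    params_per_layer.append(n_weights + n_biases)

print(params_per_layer)       # [9, 4]
print(sum(params_per_layer))  # 13
```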

`model.predict(x_new)` carries out forward propagation and inference for you, using the neural network you built with `Sequential` and compiled.
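Forward propagation over a batch works the same way as for one example, with one row per example. Here is a minimal NumPy sketch of what prediction does for the two-layer coffee network, using the table's four rows as stand-ins for new data. The helper `predict` and the weights in `params` are hypothetical: the weights are made up rather than trained, so the resulting 0/1 predictions say nothing about real coffee. The 0.5 threshold is the same one used for yhat earlier, now applied to every row at once.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(X, params):
    """Forward propagation over a whole batch: X has one example per row."""
    a = X
    for W, b in params:
        # (examples, inputs) @ (inputs, units) -> (examples, units)
        a = sigmoid(a @ W + b)
    return a

# Hypothetical weights for 2 inputs -> 3 units -> 1 unit (not trained values).
params = [
    (np.array([[0.01, -0.02, 0.005], [0.10, 0.05, -0.20]]), np.array([-2.0, 1.0, 0.5])),
    (np.array([[1.5], [-1.0], [0.5]]), np.array([0.2])),
]

x_new = np.array([[200.0, 17.0],
                  [120.0, 5.0],
                  [425.0, 20.0],
                  [212.0, 18.0]])

probs = predict(x_new, params)     # shape (4, 1): one probability per example
yhat = (probs >= 0.5).astype(int)  # threshold every row at once
print(probs.shape, yhat.ravel())
```

Running one row on its own gives the same result as that row of the batch, which is why batching is purely a convenience.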

By convention, this is how we will usually write the code:

```python
model = Sequential([
    Dense(units=3, activation="sigmoid"),
    Dense(units=1, activation="sigmoid")])
```

General Implementation of forward propagation

Let's look at a more general implementation of forward propagation in Python. We'll define a `dense` function that:

- takes as input the activations from the previous layer, as well as the parameters W and b for the neurons in the current layer;
- outputs the activations of the current layer.

```python
import numpy as np

def g(z):
    # activation function used by dense(), here the sigmoid
    return 1 / (1 + np.exp(-z))

def dense(a_in, W, b):
    units = W.shape[1]          # number of units, e.g. 3
    a_out = np.zeros(units)
    for j in range(units):
        w = W[:, j]             # pull out the jth column of W
        z = np.dot(w, a_in) + b[j]
        a_out[j] = g(z)
    return a_out
```

Given the dense function, let's string together a few dense layers sequentially to implement forward propagation in a neural network:

```python
def sequential(x):
    # W1, b1, ..., W4, b4 are the parameters of layers 1-4, defined elsewhere
    a1 = dense(x, W1, b1)
    a2 = dense(a1, W2, b2)
    a3 = dense(a2, W3, b3)
    a4 = dense(a3, W4, b4)
    f_x = a4
    return f_x
```
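To run `dense` and `sequential` end to end, they need concrete parameters. The sketch below assumes g is the sigmoid function, checks `dense` on a small hand-computed example with made-up numbers, confirms the loop matches a single matrix product, and then calls `sequential` on a hypothetical 4-layer network (2 → 3 → 4 → 2 → 1 units) whose random weights stand in for trained ones.

```python
import numpy as np

def g(z):
    """Sigmoid activation, assumed here as the g() used by dense()."""
    return 1.0 / (1.0 + np.exp(-z))

def dense(a_in, W, b):
    """Loop version: compute one unit (one column of W) at a time."""
    units = W.shape[1]
    a_out = np.zeros(units)
    for j in range(units):
        w = W[:, j]
        z = np.dot(w, a_in) + b[j]
        a_out[j] = g(z)
    return a_out

# Small worked example with hypothetical numbers: 2 inputs, 3 units.
W = np.array([[1.0, -3.0, 5.0],
              [2.0, 4.0, -6.0]])
b = np.array([-1.0, 1.0, 2.0])
a_in = np.array([-2.0, 4.0])
# z = [1*(-2) + 2*4 - 1, (-3)*(-2) + 4*4 + 1, 5*(-2) + (-6)*4 + 2] = [5, 23, -32]
a_out = dense(a_in, W, b)

# The same layer as one matrix-vector product: z = a_in @ W + b.
print(np.allclose(a_out, g(a_in @ W + b)))  # True

# Hypothetical 4-layer network with random weights: 2 -> 3 -> 4 -> 2 -> 1 units.
rng = np.random.default_rng(0)
sizes = [2, 3, 4, 2, 1]
W1, W2, W3, W4 = (rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:]))
b1, b2, b3, b4 = (rng.normal(size=n) for n in sizes[1:])

def sequential(x):
    a1 = dense(x, W1, b1)
    a2 = dense(a1, W2, b2)
    a3 = dense(a2, W3, b3)
    a4 = dense(a3, W4, b4)
    return a4

f_x = sequential(np.array([0.5, -1.2]))
print(f_x.shape)  # (1,)
```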
