Day 39: Tree Ensembles

- eyereece

- Jan 3, 2024

- 3 min read

Using Multiple Decision Trees

One of the weaknesses of using a single decision tree is that decision tree can be highly sensitive to small changes in the data. One solution to make the algorithm less sensitive or more robust is to build a lot of decision trees, we call that a tree ensemble.

Sampling with Replacement

In order to build a tree ensemble, we are going to need a technique called sampling with replacement. The process of sampling with replacement:

Let's say you have an opaque black bag that contains 4 tokens: red, green, blue, yellow

You grab one out of the bag without looking and got yellow

You placed yellow back in, and grabbed one out again and got blue

You repeat this process 4 times, and got the result: yellow, blue, blue, green

You notice that out of the four times you grabbed, blue appeared twice and red did not appear at all, that's okay, and it's a part of the sampling with replacement procedure.

The process of sampling with replacement lets you construct a new training set that's a little similar to, but also pretty different from your original training set. It turns out that this would be the key building block for building an ensemble of trees

Random Forest Algorithm

Given training set of size m:

for b=1 to B: (we do this B times)

Use sampling with replacement to create a new training set of size m

Train a decision tree on the new dataset

Typical choice of B (the number of trees you build) might be around 100 (64 - 228 is the recommendation), you would then try to make a prediction by getting these trees to vote for prediction. Setting B to a larger number never hurts performance, but beyond a certain point, it doesn't get much better and may slow down computation. This specific instantiation of tree ensemble is called bag decision tree.

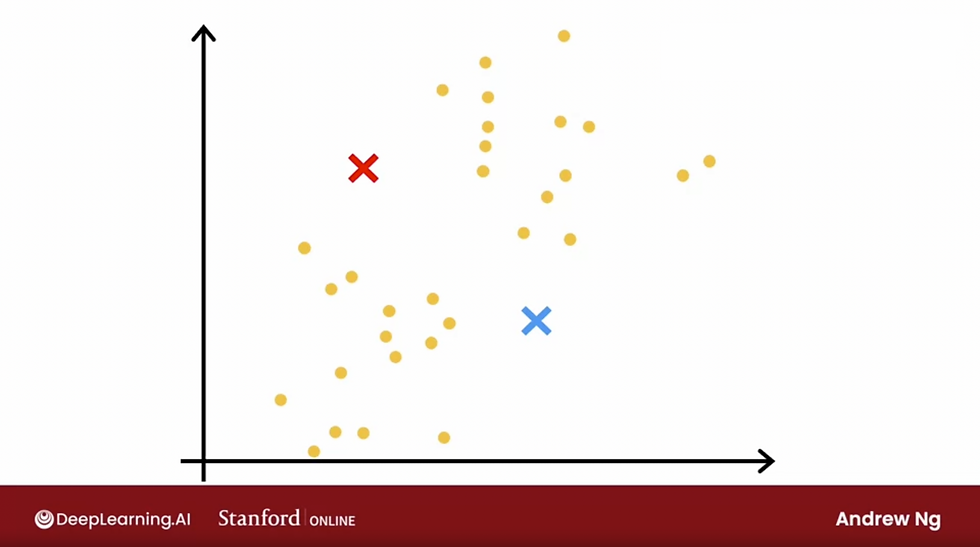

There's one modification to this algorithm that will make it better, which will be the random forest algorithm. The key is, even with the sampling with replacement procedure, sometimes you end up with always using the same split at the root node and very similar splits near the root node.

Random Forest Algorithm: at each node, when choosing a feature to split, if n features are available, pick a random subset of k < n features and allow the algorithm to only choose from that subset of features. When n is large, let's say n is 12 or 100+. A typical choice for the value of k is square-root of n.

One way to think about why random forest algorithm is more robust than a single decision tree is that sampling with replacement procedure causes the algorithm to explore a lot of small changes to the data already, and it's training different decision trees and is averaging over all of those changes to the data that the sampling with replacement procedure causes.

So this means that any changes further to the training set makes it less likely to have a huge impact on the overall output of the algorithm.

XGBoost

By far, the most common implementation of decision tree ensembles is an algorithm called XGBoost.

Boosted Tree:

Given training set of size m

for b=1 to B:

Use sampling with replacement to create a new training set of size m but instead of picking from all examples with equal (1/m) probability, make it more likely to pick misclassified examples from previously trained trees

Train a decision tree on the new dataset

So, rather than looking at all the training examples, we focus more attention on the subset of examples that is not doing well yet, and get the next decision tree ensemble to do well on them. There are different ways of implementing boosting, the most widely used is XGBoost.

XGBoost (Extreme Gradient Boosting):

Open Source Implementation of boosted trees

Fast efficient Implementation

Good choice of default splitting criteria and criteria for when splitting

Built in regularization to prevent overfitting

Highly competitive algorithm for ML competitions

An example of classification implementation:

from xgboost import XGBClassifier

model = XGBClassifier()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)Regression Implementation:

from xgboost import XGBRegressor

model = XGBRegressor()

model.fit(X_train, y_train)

y_pred = model.predict(x_test)With XGBoost, rather than doing sampling with replacement, XGBoost actually assigns different weights to different training examples.

Sample Kaggle notebooks on XGBoost:

When to use Decision Trees

Decision trees and tree ensembles:

Works well on tabular (structured) data

Not recommended for unstructured data (images, audio, text)

Fast

Small decision trees may be human interpretable

Neural Networks:

Works well on all types of data, including tabular (structured) and unstructured data

May be slower than decision tree

Works with transfer learning

When building a system of multiple models working together, it might be easier to string together multiple neural networks.

Comments