Day 27: Multi-class Classification

- eyereece

- Sep 27, 2023

- 2 min read

Multi-class

Multi-class classification refers to classification problems with more than 2 possible output labels, so not just 0 or 1. Some examples include:

Reading postal or zip codes on an envelope

Classifying which of 3 or more possible diseases a patient may have

Visual defect inspection of parts manufactured in a factory

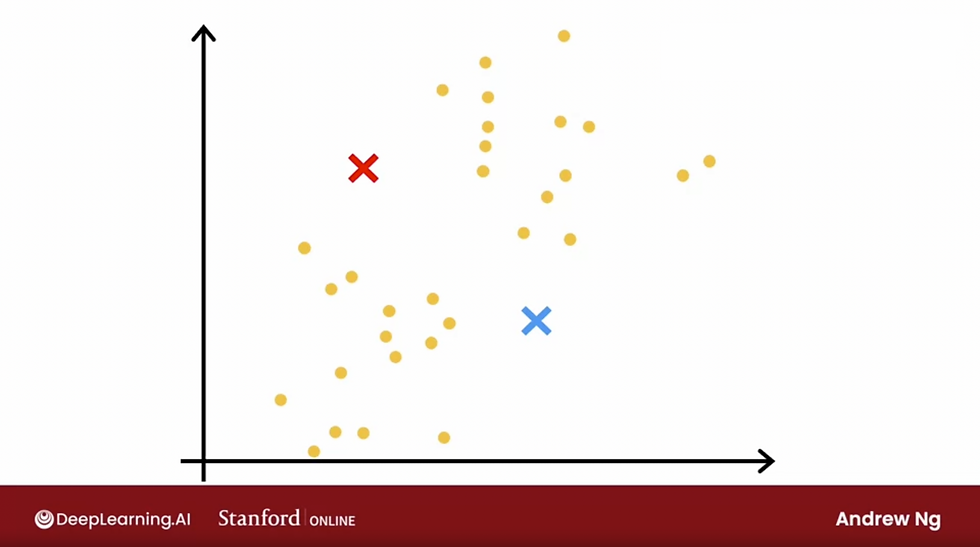

So, multi-class classification is still a classification problem: y takes on a small number of discrete categories rather than any number, but it can now take more than 2 possible values. The plot may look something like this:

Softmax

The softmax regression algorithm generalizes logistic regression, which is a binary classification algorithm, to the multi-class classification setting.

Compare the formulas for logistic regression, with only 2 possible outputs, and softmax regression, with N possible outputs, on the left side of the image below:

The right side of the image above shows the softmax regression computation with 4 possible outputs. Note how the possible outputs add up to 1.
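In case the image does not load, here is a sketch of the two formulas in commonly used notation. The symbols w, b, and z (weights, bias, and raw score) and the sigmoid g are assumptions on my part, since the text here only names the outputs a1 ... aN.

```latex
% Logistic regression (2 possible outputs)
z = \vec{w} \cdot \vec{x} + b
a_1 = g(z) = \frac{1}{1 + e^{-z}} = P(y = 1 \mid \vec{x})
a_2 = 1 - a_1 = P(y = 0 \mid \vec{x})

% Softmax regression (N possible outputs), for j = 1, \dots, N
z_j = \vec{w}_j \cdot \vec{x} + b_j
a_j = \frac{e^{z_j}}{\sum_{k=1}^{N} e^{z_k}} = P(y = j \mid \vec{x})
```

Every a_j shares the same denominator, which is why a_1 + a_2 + ... + a_N always equals 1.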

For example,

a1 = probability of the input being a picture of a dog

a2 = probability of the input being a picture of a cat

a3 = probability of the input being a picture of a bird

a4 = probability of the input being neither a dog, a cat, nor a bird.

In the example image above:

a1 = 0.30

a2 = 0.20

a3 = 0.15

a4 = 0.35

The total a1 + a2 + a3 + a4 is equal to 1. Based on this output, the highest probability is a4, so the prediction is that the input is neither a dog, a cat, nor a bird.
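To make the example concrete, here is a minimal Python sketch of the softmax computation. The raw scores z are hypothetical: I picked the logarithms of the four probabilities above so that softmax returns exactly those values. The function and variable names are also my own, not from any library.

```python
import math

def softmax(z):
    """Turn a list of raw scores into probabilities that sum to 1."""
    # Subtracting the max does not change the result, but avoids overflow in exp.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["dog", "cat", "bird", "neither"]

# Hypothetical raw scores, chosen as log(probability) so that
# softmax reproduces a1..a4 from the example above.
z = [math.log(p) for p in (0.30, 0.20, 0.15, 0.35)]

a = softmax(z)

# The predicted class is the one with the highest probability.
best = max(range(len(a)), key=lambda j: a[j])

print([round(p, 2) for p in a])  # the four probabilities a1..a4
print(round(sum(a), 6))          # the outputs add up to 1
print(labels[best])              # the label with the largest a_j
```

In a real model the scores z would come from the learned weights and the input features rather than being picked by hand.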

Let's take a look at the cost and loss function for logistic regression (2 possible outputs) vs softmax regression (N possible outputs):

In logistic regression, y can only be either 1 or 0 (per the image above).

In softmax regression, y can be 1 or 2 or 3 or ... N.
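Written out, the two loss functions look roughly like this. This is a sketch in the same a_j notation as above; the piecewise layout is my own choice of presentation.

```latex
% Logistic regression loss (y is 0 or 1)
L = -y \log a_1 - (1 - y) \log (1 - a_1)

% Softmax regression (cross-entropy) loss (y is 1, 2, ..., N)
L(a_1, \dots, a_N, y) =
\begin{cases}
  -\log a_1 & \text{if } y = 1 \\
  -\log a_2 & \text{if } y = 2 \\
  \quad \vdots \\
  -\log a_N & \text{if } y = N
\end{cases}
```

Only the term for the actual class y = j contributes to the loss for a given example. With N = 2 and a_2 = 1 - a_1, the softmax loss reduces to the logistic one.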

Take a look at the cross-entropy loss plot, where aj is the probability the algorithm assigned to the actual class y = j:

If aj is very close to 1, the loss is very small.

If aj is only 0.5 (a 50% chance), the loss gets a little bigger.

The smaller aj is, the bigger the loss.

This incentivizes the algorithm to make aj as large as possible (as close to 1 as possible).

Whatever the actual value of y was, you want the algorithm to say, hopefully, that the chance of y being that value was pretty large.
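A few numbers make the shape of the loss easier to see. This is a small sketch, assuming the loss for one example is -log(aj) with the natural logarithm. The function name and the 1-based class index are my own choices.

```python
import math

def cross_entropy_loss(a, y):
    """Loss for one example: -log of the probability given to the true class.

    a: list of softmax outputs [a1, ..., aN]
    y: the true class as a 1-based index (1..N)
    """
    return -math.log(a[y - 1])

# The loss grows as the probability of the true class shrinks.
for a_j in (0.99, 0.5, 0.1):
    print(a_j, round(-math.log(a_j), 3))  # roughly 0.01, 0.693, 2.303

# With the dog/cat/bird outputs from earlier, if the true class is 4:
example_loss = cross_entropy_loss([0.30, 0.20, 0.15, 0.35], y=4)
print(round(example_loss, 3))  # roughly 1.05
```

The loss is 0 only when aj is exactly 1, and it grows without bound as aj approaches 0.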
