Day 31: Model Selection and training/cross-validation/test sets

- eyereece

- Oct 17, 2023

- 3 min read

In the previous article, we saw how to use the test set to evaluate the performance of a model. Let's make one further refinement to that idea, which will let us use the technique to automatically choose a good model for your machine learning algorithm.

One thing we have seen is that once the parameters (w, b) are fit to the training set, the training error is likely lower than the actual generalization error. The test error is a better estimate than the training error of how well the model will generalize to new data.

The training error may not be a good indicator of how well the algorithm will generalize to new examples that were not in the training set. The actual generalization error means the average error on such new examples. Something new we're going to introduce in this section is the cross-validation (cv) set.

Recall that in the previous article, we split the dataset 70/30: 70% of the data went to the training set and 30% to the test set. To split the dataset into training, cv, and test sets, we can use a 60/20/20 split. Splits of 80/10/10 or 90/5/5 are also common, depending on how large the dataset is.
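A minimal NumPy sketch of a 60/20/20 split follows. The function name `train_cv_test_split` and its `fractions` and `seed` parameters are hypothetical, chosen for illustration; the key idea is to shuffle once and then carve the shuffled indices into three disjoint subsets.

```python
import numpy as np

def train_cv_test_split(X, y, fractions=(0.6, 0.2, 0.2), seed=0):
    """Hypothetical helper: shuffle the examples, then split into train/cv/test.

    `fractions` gives the share of examples for (train, cv, test);
    the default is the 60/20/20 split described in the text.
    """
    if not np.isclose(sum(fractions), 1.0):
        raise ValueError("fractions must sum to 1")
    m = len(X)
    rng = np.random.default_rng(seed)
    idx = rng.permutation(m)               # shuffle so each subset is representative
    n_train = int(round(fractions[0] * m))
    n_cv = int(round(fractions[1] * m))
    train_idx = idx[:n_train]
    cv_idx = idx[n_train:n_train + n_cv]
    test_idx = idx[n_train + n_cv:]        # whatever remains goes to the test set
    return (X[train_idx], y[train_idx],
            X[cv_idx], y[cv_idx],
            X[test_idx], y[test_idx])

# Usage on a toy dataset of 100 examples
X = np.arange(100, dtype=float).reshape(-1, 1)
y = 3.0 * X[:, 0] + 1.0
X_train, y_train, X_cv, y_cv, X_test, y_test = train_cv_test_split(X, y)
print(len(X_train), len(X_cv), len(X_test))  # 60 20 20
```

If you already use scikit-learn, calling its `train_test_split` twice (once to hold out the test set, once to split the rest into train and cv) achieves the same result.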

The name cross-validation refers to the fact that this is the subset you use to check, or cross-check, the validity or accuracy of the model. It may also be called the validation set, development set, or dev set.

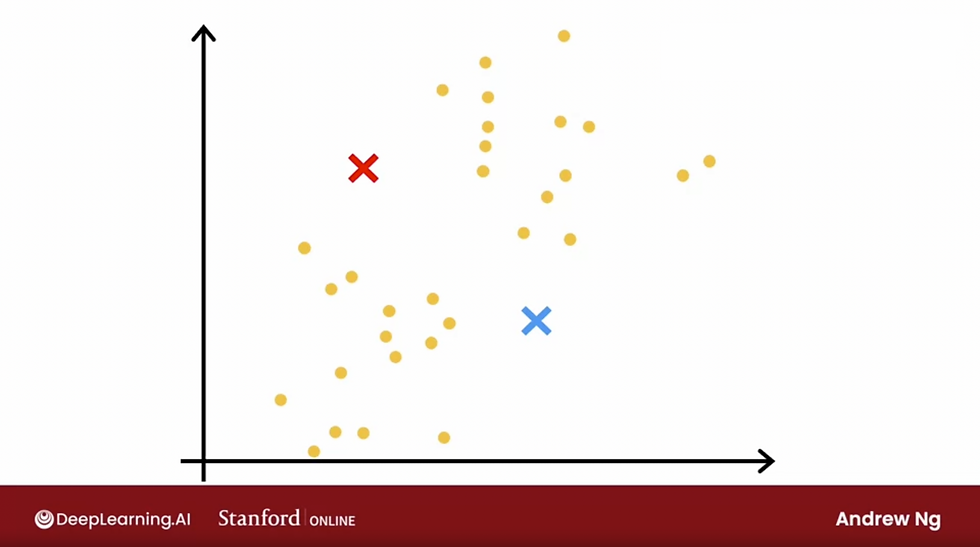
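For concreteness, here are the three measures written out for a regression model, assuming the squared-error cost with the 1/(2m) convention and no regularization term (a classification problem would use a different loss). Here m_train, m_cv, and m_test are the number of examples in each subset, and f_{w,b} is the model's prediction.

```latex
\begin{aligned}
J_{\text{train}}(\mathbf{w}, b) &= \frac{1}{2m_{\text{train}}} \sum_{i=1}^{m_{\text{train}}} \left( f_{\mathbf{w},b}\big(\mathbf{x}^{(i)}_{\text{train}}\big) - y^{(i)}_{\text{train}} \right)^2 \\
J_{\text{cv}}(\mathbf{w}, b) &= \frac{1}{2m_{\text{cv}}} \sum_{i=1}^{m_{\text{cv}}} \left( f_{\mathbf{w},b}\big(\mathbf{x}^{(i)}_{\text{cv}}\big) - y^{(i)}_{\text{cv}} \right)^2 \\
J_{\text{test}}(\mathbf{w}, b) &= \frac{1}{2m_{\text{test}}} \sum_{i=1}^{m_{\text{test}}} \left( f_{\mathbf{w},b}\big(\mathbf{x}^{(i)}_{\text{test}}\big) - y^{(i)}_{\text{test}} \right)^2
\end{aligned}
```

All three use the same formula; they differ only in which subset of the data the sum runs over.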

Armed with these three measures of algorithm performance, we can carry out model selection. Let's say we have 10 candidate models. You fit the parameters of each model on the training set and then compute its cross-validation error on the cv set. To choose a model, you pick the one with the lowest cv error.

Finally, if you want to report an estimate of the generalization error, that is, of how well this model will do on new data, you do so using the third subset of your data (the test set) and report the test error.
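The sketch below walks through this selection loop with NumPy. As an assumption for illustration, the 10 candidate models are polynomials of degree 1 to 10 fit with `np.polyfit`, and the data is a synthetic noisy quadratic; the cost is the squared error with the 1/(2m) convention. Parameters are fit on the training set only, the degree is chosen on the cv set, and the test set is used once at the end.

```python
import numpy as np

def half_mse(y_pred, y):
    """Squared-error cost with the 1/(2m) convention."""
    return np.mean((y_pred - y) ** 2) / 2.0

# Synthetic data (assumption for illustration): a noisy quadratic
rng = np.random.default_rng(1)
x = rng.uniform(-3, 3, size=200)
y = 0.5 * x**2 - x + 2 + rng.normal(scale=0.5, size=200)

# Shuffle once, then split 60/20/20 into train/cv/test
idx = rng.permutation(len(x))
train, cv, test = idx[:120], idx[120:160], idx[160:]

cv_errors = {}
fitted = {}
for d in range(1, 11):                                  # 10 candidate models: degree d = 1..10
    coeffs = np.polyfit(x[train], y[train], deg=d)      # fit parameters on the training set only
    fitted[d] = coeffs
    cv_errors[d] = half_mse(np.polyval(coeffs, x[cv]), y[cv])  # evaluate on the cv set

best_d = min(cv_errors, key=cv_errors.get)              # model with the lowest cv error

# Only now use the test set, once, to estimate generalization error
test_error = half_mse(np.polyval(fitted[best_d], x[test]), y[test])
print(f"chosen degree: {best_d}, J_cv = {cv_errors[best_d]:.3f}, J_test = {test_error:.3f}")
```

Because the degree was chosen by looking at J_cv, J_cv of the chosen model tends to be slightly optimistic; J_test, which played no part in the choice, is the fairer estimate to report.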

The model selection procedure for neural networks is as follows:

1. Divide your data into three subsets: training, cv, and test.
2. List the candidate models or neural network architectures.
3. Train each candidate on the training set to obtain its parameters (weights and biases).
4. Compute each candidate's cv error on the cv set, then pick the model with the lowest cross-validation error.

If, for example, you have 3 neural network models and neural network 2 has the lowest cv error, you pick it and use the parameters trained for that model.

Finally, if you want to report an estimate of the generalization error, use the test set to estimate how well the neural network you chose will do.
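The procedure above can be sketched end to end with NumPy alone. Everything here is an assumption for illustration: the three "architectures" are one-hidden-layer tanh networks that differ only in hidden-layer width (2, 8, and 32 units), trained with plain full-batch gradient descent on a synthetic 1-D regression problem. The helper names `train_mlp`, `predict`, and `cost` are hypothetical, not from any library.

```python
import numpy as np

def train_mlp(X, y, hidden, lr=0.03, epochs=3000, seed=0):
    """Hypothetical helper: fit a one-hidden-layer tanh network by gradient descent."""
    rng = np.random.default_rng(seed)
    n_in = X.shape[1]
    W1 = rng.normal(scale=1.0 / np.sqrt(n_in), size=(n_in, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=1.0 / np.sqrt(hidden), size=(hidden, 1))
    b2 = np.zeros(1)
    m = len(X)
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)             # hidden activations, shape (m, hidden)
        pred = (H @ W2 + b2).ravel()         # linear output for regression
        dpred = ((pred - y) / m)[:, None]    # gradient of mean(err**2)/2 w.r.t. pred
        dW2 = H.T @ dpred
        db2 = dpred.sum(axis=0)
        dH = (dpred @ W2.T) * (1 - H**2)     # backpropagate through tanh
        dW1 = X.T @ dH
        db1 = dH.sum(axis=0)
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return W1, b1, W2, b2

def predict(params, X):
    W1, b1, W2, b2 = params
    return (np.tanh(X @ W1 + b1) @ W2 + b2).ravel()

def cost(params, X, y):
    """Squared-error cost with the 1/(2m) convention."""
    return np.mean((predict(params, X) - y) ** 2) / 2.0

# Step 1: divide the (synthetic) data into train/cv/test, 60/20/20
rng = np.random.default_rng(42)
X = rng.uniform(-2, 2, size=(300, 1))
y = np.sin(2 * X[:, 0]) + rng.normal(scale=0.1, size=300)
idx = rng.permutation(300)
tr, cv, te = idx[:180], idx[180:240], idx[240:]

# Step 2: list candidate architectures (hidden-layer widths, chosen for illustration)
architectures = {"nn1": 2, "nn2": 8, "nn3": 32}

# Step 3: train each candidate on the training set only
models = {name: train_mlp(X[tr], y[tr], hidden=h) for name, h in architectures.items()}

# Step 4: compute cv error for each candidate and pick the lowest
cv_err = {name: cost(p, X[cv], y[cv]) for name, p in models.items()}
best = min(cv_err, key=cv_err.get)

# Step 5: only after choosing, evaluate the chosen network on the test set
test_err = cost(models[best], X[te], y[te])
print(best, cv_err, test_err)
```

In practice you would use a framework such as TensorFlow or PyTorch for step 3; the selection logic in steps 4 and 5 stays the same.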

It's considered best practice in machine learning to make all decisions about your model, such as fitting parameters or choosing the model architecture, using only the training set and the cv set, and not to look at the test set while you're still making decisions about your learning algorithm.

Only after you have settled on one final model should you evaluate it on the test set. Because you haven't made any decisions using the test set, the test error remains a fair, not overly optimistic, estimate of how well your model will generalize to new data.
