Day 32: Bias and Variance

- eyereece

- Oct 19, 2023


The typical workflow for developing a machine learning system is that you have an idea, you train a model, and you almost always find that it doesn't yet work as well as you'd like. The key to building an ML system is deciding what to do next to improve its performance.

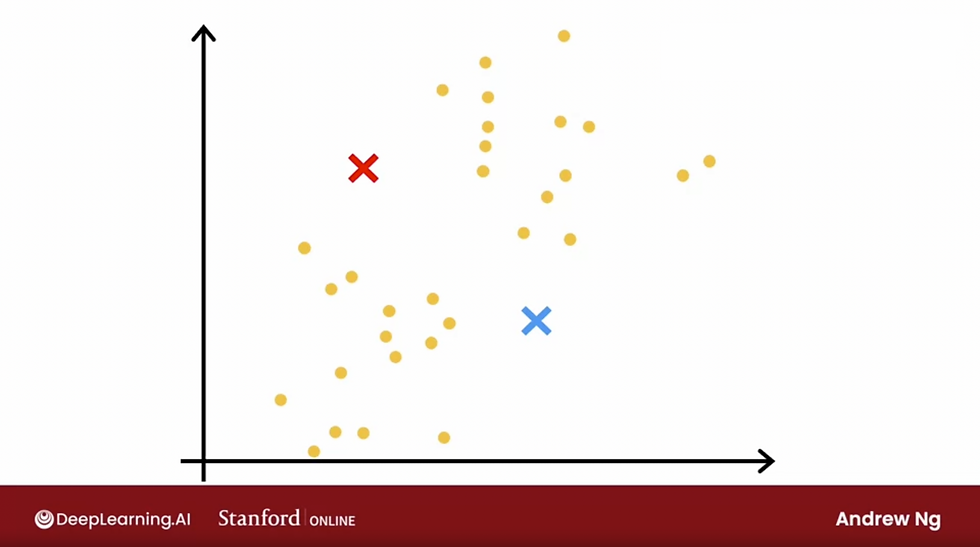

Looking at the bias and variance of a learning algorithm gives you good guidance on what to try next. A systematic way to diagnose whether your algorithm has high bias (underfit) or high variance (overfit) is to compare its performance on the training set with its performance on the cross-validation (cv) set.

High Bias (Underfit)

Some characteristics of an algorithm with high bias (underfitting):

It's not doing well on the training set (train error is high, cv error is high as well)

High Variance (Overfit)

Some characteristics of an algorithm with high variance (overfitting):

It's doing well on the training set but not on the cv set (train error is low, cv error is high)
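As a rough illustration, the two checks above can be written as a small helper. This is only a sketch: the function name `diagnose`, the `baseline_error` argument (the error level you would consider acceptable), and the `tolerance` threshold are all assumptions made for this example, not part of any library.

```python
def diagnose(train_error, cv_error, baseline_error=0.0, tolerance=0.05):
    """Label a model by comparing its train and cv errors.

    baseline_error and tolerance are hypothetical knobs chosen for
    illustration; pick values that make sense for your own problem.
    """
    # High bias: the model does poorly even on the data it was trained on.
    high_bias = (train_error - baseline_error) > tolerance
    # High variance: the model does much worse on cv data than on train data.
    high_variance = (cv_error - train_error) > tolerance

    if high_bias and high_variance:
        return "high bias and high variance"
    if high_bias:
        return "high bias (underfit)"
    if high_variance:
        return "high variance (overfit)"
    return "looks reasonable"


print(diagnose(train_error=0.30, cv_error=0.32))  # train and cv both high
print(diagnose(train_error=0.01, cv_error=0.30))  # train low, cv high
```

What counts as a "high" error depends on the task, which is why the threshold is left as a parameter here.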

Regularization and bias/variance

Let's take a look at how regularization, specifically the choice of the regularization parameter (lambda), affects the bias and variance, and therefore the overall performance, of your algorithm.

This will be helpful when you want to choose a good value of the regularization parameter lambda for your algorithm.

In this example, we're going to use a fourth-order polynomial, but we're going to fit the model using regularization, where lambda is the regularization parameter that controls how much you trade off keeping the parameters small versus fitting the training data well.

Let's take a look at an example:

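If we set lambda very high, then the algorithm is highly motivated to keep the parameters w very small, and you end up with these parameters being very close to 0. The model ends up being approximately f(x) = b, where b is a constant. The model will have high bias and underfit the data. Conversely, if lambda is very small (for example, 0), there is effectively no regularization, and a fourth-order polynomial can overfit the data, giving high variance.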
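To see the effect of a very large lambda in numbers, here is a minimal sketch using NumPy. It assumes a squared-error cost with an L2 penalty on w only (b is not regularized) and solves it in closed form. The helper names `poly_features` and `fit_ridge`, and the synthetic sine data, are made up for this example.

```python
import numpy as np


def poly_features(x, degree=4):
    """Return the columns x, x^2, ..., x^degree (no constant column)."""
    return np.column_stack([x ** d for d in range(1, degree + 1)])


def fit_ridge(X, y, lam):
    """Fit w and b by minimizing squared error plus lam * sum(w^2).

    Centering X and y first keeps the intercept b out of the penalty.
    """
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b


# Synthetic data, assumed purely for illustration.
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 30)
y = np.sin(3 * x) + 0.1 * rng.standard_normal(x.size)
X = poly_features(x)

w_small, b_small = fit_ridge(X, y, lam=1e-3)  # almost no regularization
w_large, b_large = fit_ridge(X, y, lam=1e8)   # very strong regularization

print("small lambda: w =", w_small, "b =", b_small)
print("large lambda: w =", w_large, "b =", b_large)
```

With the large lambda, every entry of w is pushed toward 0 and b ends up close to the mean of y, so the fitted curve is nearly a flat line.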

To find a good value for the regularization parameter:

Try out a large range of possible values for lambda

Fit parameters using different regularization parameters

Evaluate the performance on the cross-validation set

Pick the best value for the regularization parameter

Let's say you've trained a few different models and find that model_5 has the lowest cv error of all of them. You may then pick its value of lambda and use the parameters of model_5 as your chosen parameters.

Finally, if you want to report an estimate of the generalization error, you would report the test error of model_5.
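The whole selection procedure can be sketched in a few lines of NumPy. Everything specific here is an assumption for illustration: the synthetic data, the 60/20/20 split, the candidate lambda values, and the helper names `poly_features`, `fit_ridge`, and `mse`.

```python
import numpy as np


def poly_features(x, degree=4):
    """Return the columns x, x^2, ..., x^degree (no constant column)."""
    return np.column_stack([x ** d for d in range(1, degree + 1)])


def fit_ridge(X, y, lam):
    """Closed-form fit of w and b with an L2 penalty lam on w only."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    w = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ yc)
    return w, y_mean - x_mean @ w


def mse(X, y, w, b):
    """Mean squared error of the predictions X @ w + b."""
    return float(np.mean((X @ w + b - y) ** 2))


# Synthetic data, split 60% train, 20% cv, 20% test.
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 90)
y = np.sin(3 * x) + 0.2 * rng.standard_normal(x.size)
X = poly_features(x)
X_train, y_train = X[:54], y[:54]
X_cv, y_cv = X[54:72], y[54:72]
X_test, y_test = X[72:], y[72:]

# 1. Try a wide range of lambda values.
lambdas = [0.0, 0.01, 0.02, 0.04, 0.08, 0.16, 0.32, 0.64, 1.28, 2.56, 5.12, 10.0]
# 2. Fit one model per lambda on the training set.
models = [fit_ridge(X_train, y_train, lam) for lam in lambdas]
# 3. Evaluate each model on the cross-validation set.
cv_errors = [mse(X_cv, y_cv, w, b) for w, b in models]
# 4. Pick the lambda whose model has the lowest cv error.
best = int(np.argmin(cv_errors))
best_lambda = lambdas[best]
w_best, b_best = models[best]

# Report the test error of the chosen model as the generalization estimate.
test_error = mse(X_test, y_test, w_best, b_best)
print("best lambda:", best_lambda)
print("cv error:", cv_errors[best], "test error:", test_error)
```

The test set is only touched once, after lambda has been chosen, so the reported test error is not influenced by the selection step.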
