Day 33: Establishing a baseline level of performance

- eyereece

- Oct 19, 2023

- 2 min read

Let's look at some concrete numbers for training error (train-error) and cross-validation error (cv-error), and see how you can judge whether a learning algorithm has high bias or high variance.

Let's start with an example of a speech recognition system:

Many users doing web searches on their phones use speech recognition.

Typical audio clips might be "What is today's weather?" or "Coffee shops near me."

The speech recognition algorithm's job is to output the transcript of each clip, such as "What is today's weather?" or "Coffee shops near me."

Say you train the speech recognition system and measure its training error at 10.8% (meaning it transcribes 89.2% of the training set perfectly) and its cv-error at 14.8%. So, is this good or bad?
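To make the error figure concrete: the sketch below assumes error is the percentage of clips whose transcript is not an exact match, which is the definition implied by "transcribes perfectly." The helper `transcription_error` and the transcripts are hypothetical illustration data. Real speech systems are often scored with word error rate instead; this exact-match version only mirrors the wording above.

```python
def transcription_error(predicted: list[str], reference: list[str]) -> float:
    """Return the percentage of clips whose predicted transcript does not
    exactly match the reference transcript (hypothetical exact-match metric)."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    mistakes = sum(p != r for p, r in zip(predicted, reference))
    return 100.0 * mistakes / len(reference)


# Hypothetical toy data: 1 of the 4 clips is transcribed incorrectly.
reference = ["what is today's weather", "coffee shops near me",
             "what is today's weather", "coffee shops near me"]
predicted = ["what is today's weather", "coffee shops near me",
             "what is to day's weather", "coffee shops near me"]

error = transcription_error(predicted, reference)
print(f"error = {error:.1f}%, perfectly transcribed = {100 - error:.1f}%")
# error = 25.0%, perfectly transcribed = 75.0%
```

With a 10.8% error measured this way, 100% - 10.8% = 89.2% of clips are transcribed perfectly.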

When analyzing speech recognition, it's also useful to measure human-level performance: how accurately can humans transcribe these audio clips?

Say humans achieve 10.6% error. In this example, the train-error is only 0.2% worse than human-level performance, whereas the gap between cv-error and train-error is much larger (4.0%). Because the algorithm comes close to the human-level benchmark on the training set but does much worse on the cross-validation set, it has a high-variance (overfitting) problem.
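The two gaps in this example work out as follows; the variance gap is 20 times the bias gap, which is why variance rather than bias is the problem here:

$$
\begin{aligned}
\text{train-error} - \text{human-level error} &= 10.8\% - 10.6\% = 0.2\% \\
\text{cv-error} - \text{train-error} &= 14.8\% - 10.8\% = 4.0\%
\end{aligned}
$$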

The baseline level of performance is the level of error you can reasonably hope your algorithm will eventually reach.

You can establish a baseline level of performance with the following:

human-level performance

the performance of a competing algorithm

Human-level performance is often a good benchmark when you're working with unstructured data such as audio, images, or text. A competing algorithm can be a previous implementation that someone else built, or a competitor's algorithm.

To judge whether an algorithm has high bias or high variance, look at the baseline level of performance, the train-error, and the cv-error. The two key quantities to measure are:

What's the gap between train-error and the baseline level? A large gap indicates high bias.

What's the gap between train-error and cv-error? A large gap indicates high variance.
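A minimal sketch of computing the two gaps, assuming all errors are given as percentages. The names `Gaps` and `performance_gaps` are hypothetical; rounding is only there to hide floating-point noise in the printed result.

```python
from typing import NamedTuple


class Gaps(NamedTuple):
    bias_gap: float      # train-error minus baseline
    variance_gap: float  # cv-error minus train-error


def performance_gaps(baseline: float, train_error: float, cv_error: float) -> Gaps:
    """Compute the two gaps used to diagnose bias and variance (all in %)."""
    return Gaps(
        bias_gap=round(train_error - baseline, 2),
        variance_gap=round(cv_error - train_error, 2),
    )


# Speech recognition example: human-level baseline 10.6%, train 10.8%, cv 14.8%.
print(performance_gaps(baseline=10.6, train_error=10.8, cv_error=14.8))
# Gaps(bias_gap=0.2, variance_gap=4.0)
```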

Let's look at some examples:

The following model has a high-variance (overfitting) problem, since train-error is close to the baseline but cv-error is much higher than train-error:

Baseline: 10.6%

Train-error: 10.8%

CV-error: 14.8%

The following model has a high-bias (underfitting) problem, since train-error is well above the baseline while cv-error is close to train-error:

Baseline: 10.6%

Train-error: 15.0%

CV-error: 15.5%

The following model has both high bias (underfitting) and high variance (overfitting), since train-error is well above the baseline and cv-error is well above train-error:

Baseline: 10.6%

Train-error: 15.0%

CV-error: 19.7%
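Turning the gaps into a verdict needs some cutoff for what counts as "large." The examples above don't state one, so this sketch assumes a hypothetical 1-percentage-point `threshold`; in practice, what counts as large is a judgment call. The function `diagnose` is hypothetical.

```python
def diagnose(baseline: float, train_error: float, cv_error: float,
             threshold: float = 1.0) -> str:
    """Classify a model as high bias, high variance, both, or neither.

    `threshold` (in percentage points) is a hypothetical cutoff for a "large" gap.
    """
    high_bias = (train_error - baseline) > threshold
    high_variance = (cv_error - train_error) > threshold
    if high_bias and high_variance:
        return "high bias and high variance"
    if high_bias:
        return "high bias"
    if high_variance:
        return "high variance"
    return "neither"


# The three examples above: (baseline, train-error, cv-error), all in %.
examples = [(10.6, 10.8, 14.8), (10.6, 15.0, 15.5), (10.6, 15.0, 19.7)]
for baseline, train, cv in examples:
    print(baseline, train, cv, "->", diagnose(baseline, train, cv))
# 10.6 10.8 14.8 -> high variance
# 10.6 15.0 15.5 -> high bias
# 10.6 15.0 19.7 -> high bias and high variance
```

Because the bias check subtracts the baseline, the same 15.0% train-error would not count as high bias against a hypothetical baseline of 14.8%.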

When judging your algorithm, rather than asking whether the training error is high in absolute terms, ask whether it is large relative to the baseline level you hope to eventually reach.

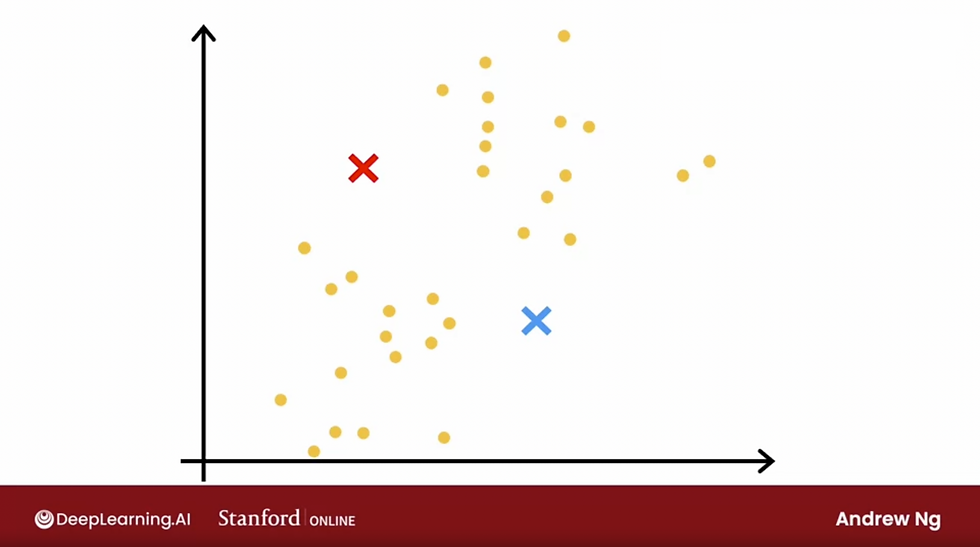

For more on judging how your learning algorithm is doing, see Andrew Ng's lecture on learning curves: Lecture 0606 Learning Curves
