Day 36: Decision Trees

- eyereece

- Nov 15, 2023

- 3 min read

In this section, we will learn about a practical and very commonly used learning algorithm: the decision tree. We will also learn about variations of the decision tree.

Learning Objectives:

see what a decision tree looks like and how ti can be used to make predictions

learn how a decision tree learns from training data

learn the "impurity" metric "entropy" and how it's used when building a decision tree

learn when to use decision trees and neural network.

Decision Tree Model

To explain how decision trees work, let's take a look at a cat classification example:

Ear Shape (x1) | Face Shape (x2) | Whiskers (x3) | Cat (y) |

Pointy (PT) | Round (R) | Present (P) | 1 |

Floppy (FP) | NR (Not Round) | P | 1 |

FP | R | Absent (A) | 0 |

PT | NR | P | 0 |

PT | R | P | 1 |

PT | R | A | 1 |

FP | NR | A | 0 |

PT | R | A | 1 |

FP | R | A | 0 |

FP | R | A | 0 |

This is a binary classification task because the labels are one or zero, and here's an example of a decision tree model that you might get after training a decision tree algorithm on the data above:

Decision Nodes: they look at a particular feature and based on the value of the feature, causes you to decide whether to go left or right down the tree

Leaf Nodes: They make a prediction

There are many different form of decision trees. Among these different decision trees, some will do better and some will do worse on the training sets or cross-validation (cv) sets and test sets.

The job of the decision tree learning algorithm is, out of all possible decision trees, to try to pick one that hopefully does well on the training set, and then also ideally generalizes well to new data such as your cv and test sets as well.

Learning Process

The first step in decision tree learning is to decide what feature to use at the root node. The feature we choose for the nodes are usually done through an algorithm.

Let's say, we pick the ear shape as the feature on the root node. What we'll do then, is to look at all of our training examples, and split them according to the value of the ear shape feature: pointy or floppy.

The second step is focusing on just the left branch of the decision tree to decide what nodes to put there. In particular, what feature we want to split on or what feature to use next. Let's say, through the algorithm, we decide to use the face shape: round or not round.

We will then take the examples that fit the features and move them, we will continue doing this until we reach a prediction, in which we will then repeat a similar process on the right branch. This is the process of building a decision tree.

Through this process, there are 2 key decisions that we had to make at various steps during the algorithm:

How to choose what feature to split on at each node?

When do you stop splitting

To choose what feature to split on:

decision trees will choose what feature to split on to maximize purity

purity means: you want to get what subsets? all dogs or all cats?

When to stop splitting:

when a node is 100% one class (100% dogs or cats)

when splitting a node will result in the tree exceeding a maximum depth: this makes sure the tree doesn't get too big or unwieldy and makes it less prone to overfitting

when improvements in purity score are below a threshold

when number of examples in a node is below a threshold

Decision Tree Learning

Let's take a look at a way to measure the purity of a set of examples. If the examples are all cats of a single class, then that's very pure, and so is "all not cats".

Entropy is a measure of the impurity of a set of data.

Let's say, given a set of 6 examples, 3 cats and 3 dogs:

P1 = fraction of examples that are all cats

In this example P1 is 3/6 = 0.5

The function of Entropy = H(P1)

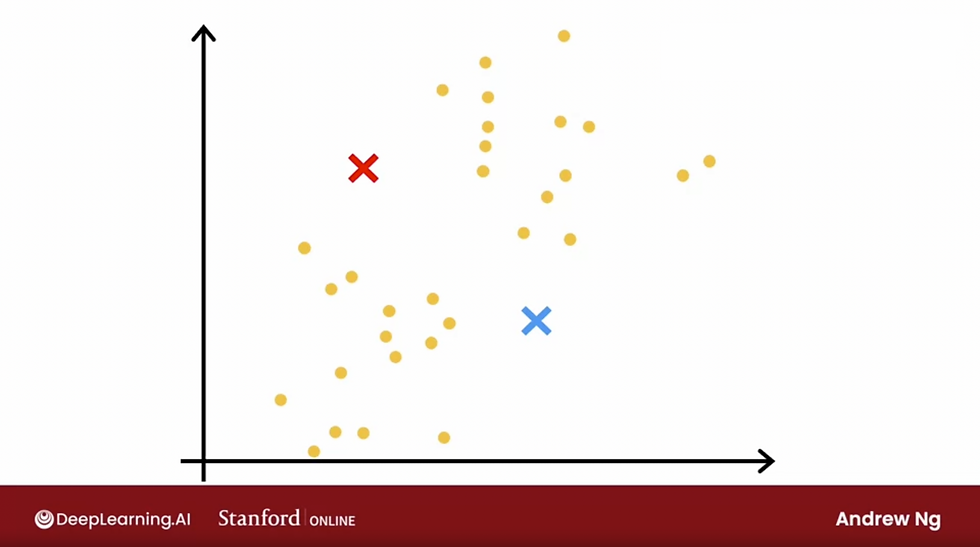

Let's take a look at the plot:

In the illustration above, the vertical axis is the H

Thus in our example,

H(P1) = H(3/6) = 1

If you notice in the curve of entropy:

It's most impure when your set is 50/50

It's most pure (entropy=0) when your set is 0.0 or 1.0

P0 if a fraction of examples that are not P1, so if P1 = 2/3 cats, P0 = 1/3 cat

P0 = 1 - P1

The function of entropy:

image taken from the course: Deeplearning.ai

Technically log 0 is undefined or infinity, but by convention for the purposes of computing entropy, we'll take "0log(0) = 0", and that will comput the entropy as 0 or as 1=0.

To summarize, the entropy function is a measure of the impurity of a set of data. It starts from 0, goes up to 1, and then comes back down to 0 as a function of the fraction of positive examples in your sample.

Comments